Modern quantitative systems rely on simulation, optimisation and high-dimensional dynamics. The underlying models are often accurate but limited in speed, accessibility and ease of integration. Simu.ai helps organisations convert these advanced models into fast, reliable, deployable components for pricing, forecasting, optimisation and decision frameworks across finance, energy and telecom.

1. Model Analysis and Problem Framing

A technical review ensures that the foundations of the model are well designed and suitable for downstream approximation.

This stage focuses on correctness and structure rather than implementation.

What this includes

- Examination of model equations, assumptions and numerical formulation

- Identification of symmetries, invariants and constraints that improve stability and reduce redundancy

- Verification that the model behaves consistently across inputs and remains well posed

- Clarification of the target outputs so the neural model is trained for precisely the right objective
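As an illustration of the symmetry checks listed above, here is a minimal Python sketch. It is not Simu.ai's tooling: it assumes a Black-Scholes call price as a stand-in for the client model, and the names `bs_call` and `check_homogeneity` are hypothetical. The check verifies that scaling spot and strike by the same factor scales the price by that factor, which means the two inputs can be collapsed into a single moneyness variable before any approximation is attempted.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(spot: float, strike: float, vol: float, maturity: float, rate: float = 0.0) -> float:
    """Black-Scholes European call price (illustrative stand-in model)."""
    sqrt_t = math.sqrt(maturity)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t
    return spot * norm_cdf(d1) - strike * math.exp(-rate * maturity) * norm_cdf(d2)


def check_homogeneity(scale: float = 3.0, tol: float = 1e-9) -> bool:
    """Check that price(scale * S, scale * K) equals scale * price(S, K)."""
    base = bs_call(100.0, 110.0, 0.2, 1.0, 0.01)
    scaled = bs_call(scale * 100.0, scale * 110.0, 0.2, 1.0, 0.01)
    return abs(scaled - scale * base) <= tol * max(1.0, abs(scaled))
```

When a check like this passes, the surrogate can be trained on the ratio of spot to strike rather than on both inputs, which removes a redundant dimension.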

Outcome

A mathematically sound and clearly defined model that is ready for dataset generation and neural approximation.

2. Dataset Generation

High-quality neural models depend on high-quality data. Training datasets are created to capture the full behaviour of the system while controlling numerical accuracy and computational cost.

What this includes

- Coverage of the input parameter space so the network generalises reliably

- Accurate numerical simulations using Monte Carlo, finite difference or custom environments

- Scalable generation pipelines using distributed cloud compute for large training sets

- Extraction of the statistics or state variables that best represent the model’s behaviour
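The steps above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the production pipeline: the parameter ranges, the zero-rate geometric Brownian motion payoff, and the names `latin_hypercube`, `mc_call_price` and `generate_dataset` are all hypothetical. It shows stratified coverage of the input space together with a Monte Carlo estimate and its standard error for each sample, so that numerical accuracy is recorded alongside every label.

```python
import math
import random

# Hypothetical input ranges; moneyness is spot divided by strike.
PARAM_RANGES = {"moneyness": (0.5, 1.5), "vol": (0.05, 0.6), "maturity": (0.1, 2.0)}


def latin_hypercube(ranges, n_samples, rng):
    """Latin hypercube sample: one draw per stratum, strata shuffled per dimension."""
    columns = {}
    for name, (lo, hi) in ranges.items():
        points = [lo + (hi - lo) * (i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(points)
        columns[name] = points
    return [{name: columns[name][i] for name in ranges} for i in range(n_samples)]


def mc_call_price(moneyness, vol, maturity, n_paths, rng):
    """Monte Carlo call price with strike 1 and zero rates, plus its standard error."""
    drift = -0.5 * vol ** 2 * maturity
    diffusion = vol * math.sqrt(maturity)
    total = 0.0
    total_sq = 0.0
    for _ in range(n_paths):
        terminal = moneyness * math.exp(drift + diffusion * rng.gauss(0.0, 1.0))
        payoff = max(terminal - 1.0, 0.0)
        total += payoff
        total_sq += payoff * payoff
    mean = total / n_paths
    variance = max(total_sq / n_paths - mean * mean, 0.0)
    return mean, math.sqrt(variance / n_paths)


def generate_dataset(n_samples, n_paths, seed=0):
    """Return one row per sampled parameter set: inputs, price label, standard error."""
    rng = random.Random(seed)
    rows = []
    for params in latin_hypercube(PARAM_RANGES, n_samples, rng):
        price, stderr = mc_call_price(**params, n_paths=n_paths, rng=rng)
        rows.append({**params, "price": price, "stderr": stderr})
    return rows
```

Storing the standard error with each label makes it possible to filter or reweight noisy samples later, and fixing the seed keeps the dataset reproducible.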

Outcome

A clean, comprehensive and numerically consistent dataset that forms a solid foundation for neural model training.

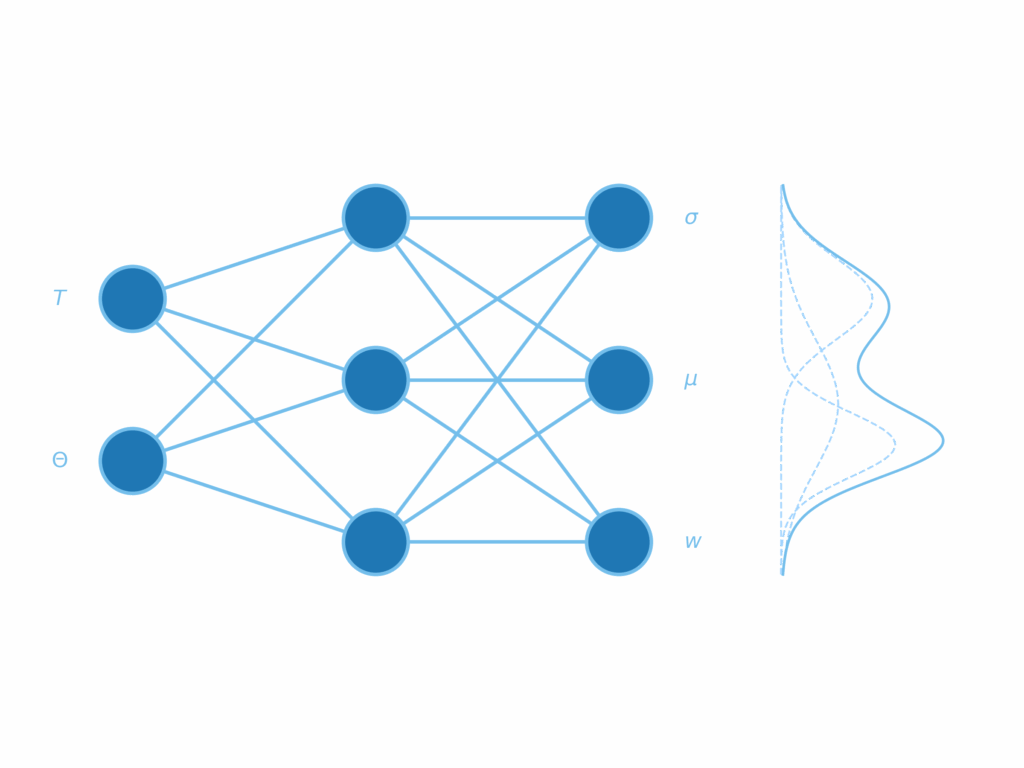

3. Neural Model Training

Neural models are designed to match the structure and accuracy requirements of the original system while reducing computational cost by several orders of magnitude.

What this includes

- Selection of suitable architectures and model capacity

- Choice of loss functions aligned with the final use case such as pricing accuracy or decision quality

- Training strategies that balance model size and performance

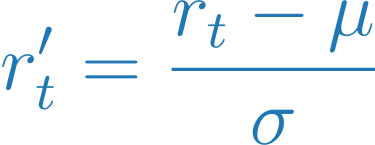

- Feature engineering and parameter transformations that improve stability and convergence
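To make the training step concrete, here is a deliberately small sketch in pure Python. It is an assumption-laden toy, not the architecture or training procedure used in practice: the target is a zero-rate Black-Scholes call price with strike 1 and a fixed total volatility of 0.2, the network has one hidden tanh layer, and the names `target_price`, `forward`, `mean_loss` and `train` are hypothetical. It shows a parameter transformation (log-moneyness as the input feature) and full-batch gradient descent on a squared-error loss.

```python
import math
import random


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def target_price(moneyness, total_vol=0.2):
    """Stand-in for the slow model: zero-rate Black-Scholes call with strike 1."""
    d1 = math.log(moneyness) / total_vol + 0.5 * total_vol
    return moneyness * norm_cdf(d1) - norm_cdf(d1 - total_vol)


def forward(params, x):
    """One hidden tanh layer followed by a linear output."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    return hidden, sum(v * h for v, h in zip(w2, hidden)) + b2[0]


def mean_loss(params, xs, ts):
    """Half the mean squared error over the training set."""
    return 0.5 * sum((forward(params, x)[1] - t) ** 2 for x, t in zip(xs, ts)) / len(xs)


def train(n_hidden=8, epochs=500, lr=0.05, seed=0):
    rng = random.Random(seed)
    # Feature transformation: train on log-moneyness for moneyness in [0.5, 1.5].
    xs = [math.log(0.5 + i / 31) for i in range(32)]
    ts = [target_price(math.exp(x)) for x in xs]
    params = ([rng.gauss(0.0, 1.0) for _ in range(n_hidden)],  # hidden weights
              [0.0] * n_hidden,                                # hidden biases
              [0.0] * n_hidden,                                # output weights
              [0.0])                                           # output bias
    w1, b1, w2, b2 = params
    initial = mean_loss(params, xs, ts)
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * n_hidden, [0.0] * n_hidden, [0.0] * n_hidden, 0.0
        for x, t in zip(xs, ts):
            hidden, y = forward(params, x)
            err = (y - t) / len(xs)  # derivative of the loss w.r.t. this output
            g_b2 += err
            for j, h in enumerate(hidden):
                g_w2[j] += err * h
                back = err * w2[j] * (1.0 - h * h)  # backpropagate through tanh
                g_w1[j] += back * x
                g_b1[j] += back
        for j in range(n_hidden):
            w1[j] -= lr * g_w1[j]
            b1[j] -= lr * g_b1[j]
            w2[j] -= lr * g_w2[j]
        b2[0] -= lr * g_b2
    return params, initial, mean_loss(params, xs, ts)
```

Calling `train()` returns the fitted parameters with the loss before and after training. A production model would use a dedicated framework, a held-out validation set and a loss chosen for the final use case.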

Outcome

A compact neural model that reproduces the behaviour of the original system with controlled accuracy and real-time performance.

4. Delivery and Integration

The final model is delivered in a format that integrates cleanly into existing workflows, with the necessary tooling to support production use.

What this includes

- Python Library: for integration with research, calibration or risk pipelines

- Docker Image and REST API: for deployment inside enterprise environments with strict security or infrastructure boundaries

- WebAssembly Model: for browser-based tools, real-time interfaces and zero-install distribution

- Documentation: to support validation, maintenance and future extensions by internal teams
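As a rough illustration of the REST delivery option, the sketch below wraps a model behind a single JSON endpoint using only the Python standard library. Everything in it is an assumption made for the example: the `/predict` route, the `moneyness` field, and the placeholder linear `predict` function, which stands in for a trained model. The actual interface delivered to a client may look quite different.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Placeholder for the trained model (hypothetical linear formula)."""
    return 0.1 + 0.5 * float(features["moneyness"])


def handle_predict(raw_body: bytes):
    """Turn a JSON request body into an (HTTP status, JSON response body) pair."""
    try:
        features = json.loads(raw_body)
        payload = {"prediction": predict(features)}
        status = 200
    except (ValueError, KeyError, TypeError):
        payload = {"error": "expected a JSON object with a numeric 'moneyness' field"}
        status = 400
    return status, json.dumps(payload).encode("utf-8")


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 0) -> HTTPServer:
    """Create (but do not start) a server bound to localhost."""
    return HTTPServer(("127.0.0.1", port), PredictHandler)
```

Calling `make_server(8080).serve_forever()` would start the service locally. Keeping the request logic in `handle_predict` separates it from the transport layer, so the same function can be validated without a running server.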

Outcome

A deployable, well-documented model ready for use in production or interactive tools.